GENERATIVE AI IS EXPLODING. SO WHAT?

Richard Bagnall, co-managing partner at CARMA, takes a look at the opportunities and risks that come with AI technology.

When one of the ‘godfathers of AI’ says he’s worried, it’s time to pay attention.

In an interview with The New York Times, Geoffrey Hinton spoke about AI’s potential to destabilise the truth, its impact on jobs and the prospect of it becoming smarter than humans. So how is AI evolving, and what is its current, and future, role in communications?

Communication is changing

AI is used in many forms across sectors, but it’s generative AI that’s booming and has seized media attention.

As McKinsey sums it up, generative AI describes algorithms (such as ChatGPT) that can create new content, including audio, code, images, text, simulations, and videos. As such, its applications are endless.

Across media, it can generate new ideas, suggest optimised headlines, or draft social media copy. Bing and Google use generative AI in their search functions, and Damien Hirst’s recent AI project made over $20m.

One of the big advantages of generative AI is that it can take menial tasks off human hands. For example, organisations and agencies must often produce large amounts of written content. Generative AI could help here by outlining and structuring first drafts that professionals can quickly polish into final pieces.

Danger ahead

The enormous power generative AI wields means we must scrutinise its use.

One key problem is the data on which generative AI is trained. As AI-generated content rises, so do copyright concerns. An even bigger foundational concern revolves around the bias in AI. Though a piece of code is emotionless, it’s written by biased humans and trained on data that humans provide. Robots trained on AI have been shown to become racist and sexist. Plus, generative AI confidently makes significant mistakes. A news agency tried using ChatGPT to write an article, and the AI got every important fact wrong.

In a world already wrestling with misinformation, the ethical implications are seismic. Not only is there the risk of unintentional misinformation, but generative AI also opens the door to deliberate misuse.

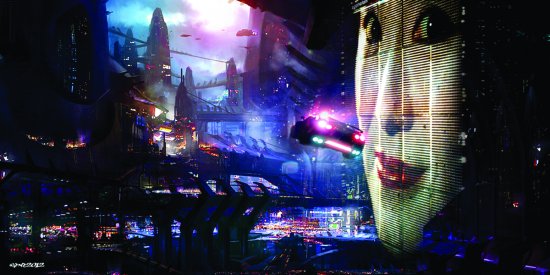

Deepfakes have been around for a while, but platforms like TikTok are popularising the use of AI to mimic celebrities and political figures. For organisations, the consequences of AI-generated misinformation may be reputational or financial, but socially and politically, they could be a matter of life and death. As Hinton suggests, it risks creating a world where people might “not be able to know what is true anymore”.

Controlling the future

The way generative AI is evolving means legislation is desperately needed. Some governments are getting to work: the European Commission started drafting the AI Act almost two years ago, and it’s now entering the trilogue stage.

Even so, there is growing concern that governments may not legislate fast enough to keep up with the rapid development of AI technology. Technological progress often outpaces policy-making, and the complexity of AI makes it difficult for lawmakers to understand and regulate. The result can be laws that are outdated before they take effect and unable to regulate AI effectively.

But what about the worries over job losses that always come with news of tech automation? They are understandable, but AI’s glaring errors show that, for now, the communications industry cannot wholly hand itself over to a computer. AI needs rigorous checking, guiding and finessing by human hands.

Humans have created a powerful tool with generative AI. Whether it ultimately helps or harms us will be decided by what we do now.