PROMOTING ETHICAL AI IN PUBLIC RELATIONS

Artificial intelligence (AI) is one of the most significant innovations society has experienced since the Industrial Revolution, and the Chartered Institute of Public Relations (CIPR) rightly reiterates the importance of approaching this new technology with caution. A recently released skills guide from the CIPR refers to AI as “the ability to imitate human behaviour...and needs to be understood in many different ways.” In layman’s terms, AI software uses data and repetition to carry out complex, data-heavy tasks more efficiently.

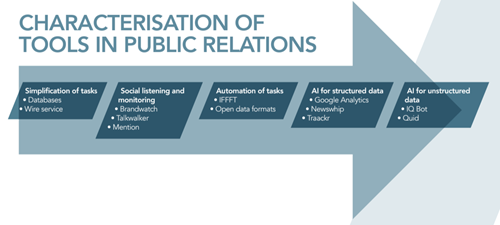

The CIPR launched the #AIinPR panel two years ago to examine the influence of AI on PR and to start a public conversation about AI-related issues. The panel has devised a five-level scale for characterising the tools used in PR: simplification of tasks, social listening and monitoring, automation of tasks, structured data and unstructured data. “There are software tools that simplify tasks, some that automate rote tasks, and more sophisticated algorithms that [analyse] and predict outcomes, and it is these latter types of tools that truly use AI. The CIPR #AIinPR panel has crowdsourced more than 130 tools used by public relations professionals using the five-point scale,” says the CIPR.

The most obvious drawback many have anticipated from AI is the potential disruption of employment, given that AI enables machines to solve problems that would previously have required social skills and other human capabilities. Realistically, however, AI is more likely to create jobs than to eliminate them and, better yet, to reshape contemporary employment norms. AI may be able to write press releases, send out media lists or generate a media advisory; however, no matter how advanced technology becomes, human-to-human communication is irreplaceable. AI can’t take a client to lunch, make a persuasive phone call or brainstorm new ideas. AI will inevitably change the culture of media relations; nevertheless, many in the industry are not yet on board.

The other contentious aspect of AI is the ethics behind it. Capgemini recently conducted a global study of “consumer attitudes to the ethical use of AI and businesses’ confidence in whether they are delivering ethical AI systems.” According to Capgemini, UK respondents “had the most confidence out of all countries surveyed that their organisation’s AI systems are transparent.” Yet even in the top-ranked UK, only 36% agreed, and that figure fell to 28% when respondents were asked whether their systems are “ethical or fair.” The CIPR advises practitioners to “beware the hype,” noting that “AI vendors make many promises,” many of which cannot be fulfilled.

AI can also be invasive and may violate the privacy of anyone storing data in the cloud. Given how rapidly data volumes are multiplying, nearly everybody is likely to experience some degree of interference with their personal information. According to the CIPR skills guide, “More data has been created in the past two years than in the entire previous history of the human race. Data is growing faster than ever before, and by the year 2020, about 1.7 megabytes of new information will be created every second for every human being on the planet.” That’s a boatload of data, and AI relies on information like this. Many have already recognised the problem with such intrusions into personal privacy: in total, 79% of UK consumers believe there should be further regulation of how companies use AI.

No such regulation appears to be in motion yet; however, as artificial intelligence becomes more mainstream, it is reasonable to expect that safeguards and norms of use will evolve alongside the technology itself.

For more from Communicate magazine, sign up for the Communicate newsletter here and follow us on Twitter @Communicatemag.